YouTube - Three Strikes for Thee, Never for Me: How YouTube Enables Abuse by Protecting Repeat Offenders

The Platform Accountability Files — Vol. I.

YouTube tells the world it has rules.

Community Guidelines.

Harassment Policies.

Copyright Policies.

Misinformation Policies.

The famous “Three Strikes System.”

On paper, it all looks disciplined. Fair. Neutral. Systemic.

In reality?

YouTube enforces its rules the same way a corrupt small-town sheriff enforces traffic laws: selectively, inconsistently, and always in the interests of the people it protects — not the public.

For normal users, a single misstep triggers demonetization, deletion, or a channel wipeout.

For favored creators — especially those generating controversy, drama, or ad revenue?

YouTube suddenly becomes blind, deaf, and terminally confused.

This isn’t a glitch. It’s a business model.

I. The Three Strikes Illusion

YouTube’s “Three Strikes Policy” is one of the best-known enforcement mechanisms on the internet.

First violation: Warning.

Strikes 1 and 2: Temporary restrictions.

Strike 3 (within 90 days): Channel termination.

Sounds simple. Sounds fair. Except there’s one problem:

YouTube doesn’t actually apply it to abusers, doxxers, serial harassers, coordinated smear campaign channels, or repeat copyright infringers.

Those creators routinely:

violate rules,

evade copyright enforcement,

use defamatory thumbnails,

attack private individuals,

monetize dogpiling,

fabricate allegations,

stir harassment mobs,

rack up report after report…

…and remain untouched.

Meanwhile, creators who criticize those same abusers can be punished instantly with:

“misleading metadata,”

“reused content,”

“copyright issues,”

“harassment,”

or broad “community guidelines” violations.

Your violation is “content enforcement.” Their violation is “engagement.”

YouTube polices behavior only when it’s not profitable.

II. The Rise of the Repeat Offender Channel

There is now an identifiable species on YouTube:

The Repeat Offender Channel (ROC).

These channels almost always share the same traits:

They skirt the line between commentary and targeted harassment

They misuse copyrighted images for thumbnails

They recycle the same outrage cycle to stay afloat

They rely on drama-based revenue

They cultivate parasocial minions to attack their targets

They mass-report anyone who criticizes them

They weaponize YouTube’s broken enforcement to silence opposition

In many cases, these creators will openly brag:

“I’ve beaten three copyright strikes.”

“I know how to get around fair use.”

“YouTube won’t touch me.”

Unfortunately, they’re not wrong.

YouTube’s enforcement failure is so predictable that bad actors treat it as a feature of the platform.

When abuse is profitable, policy becomes optional.

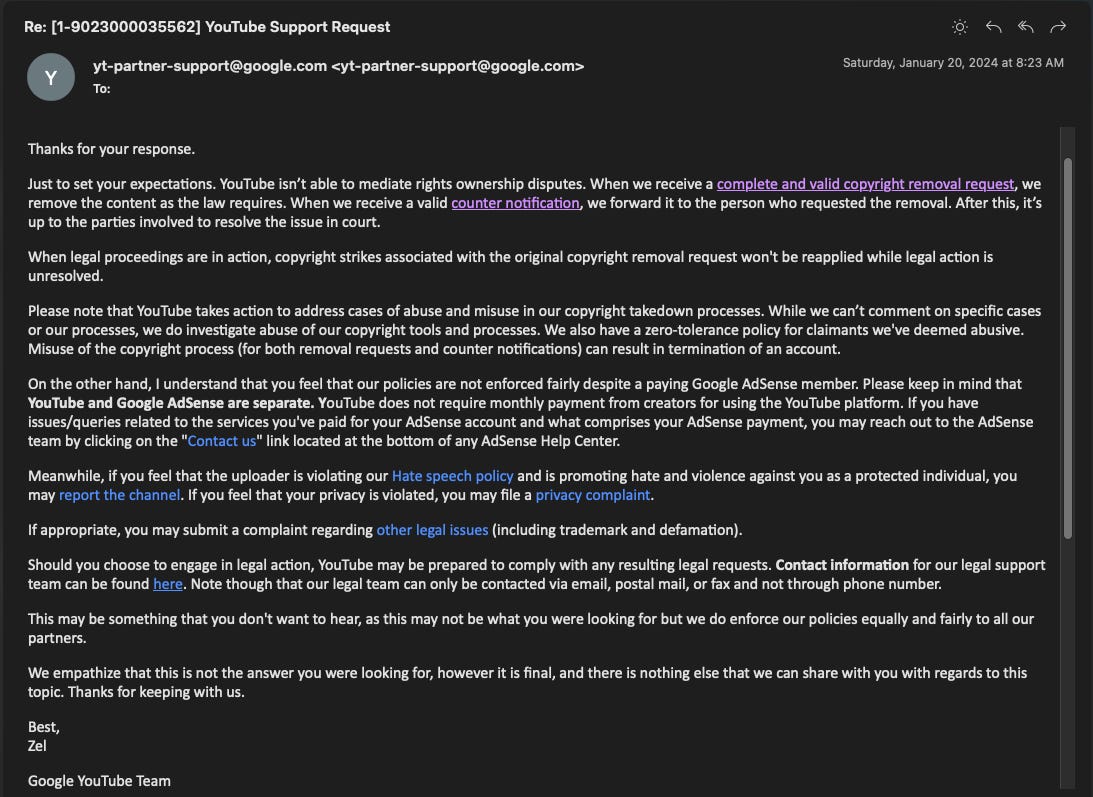

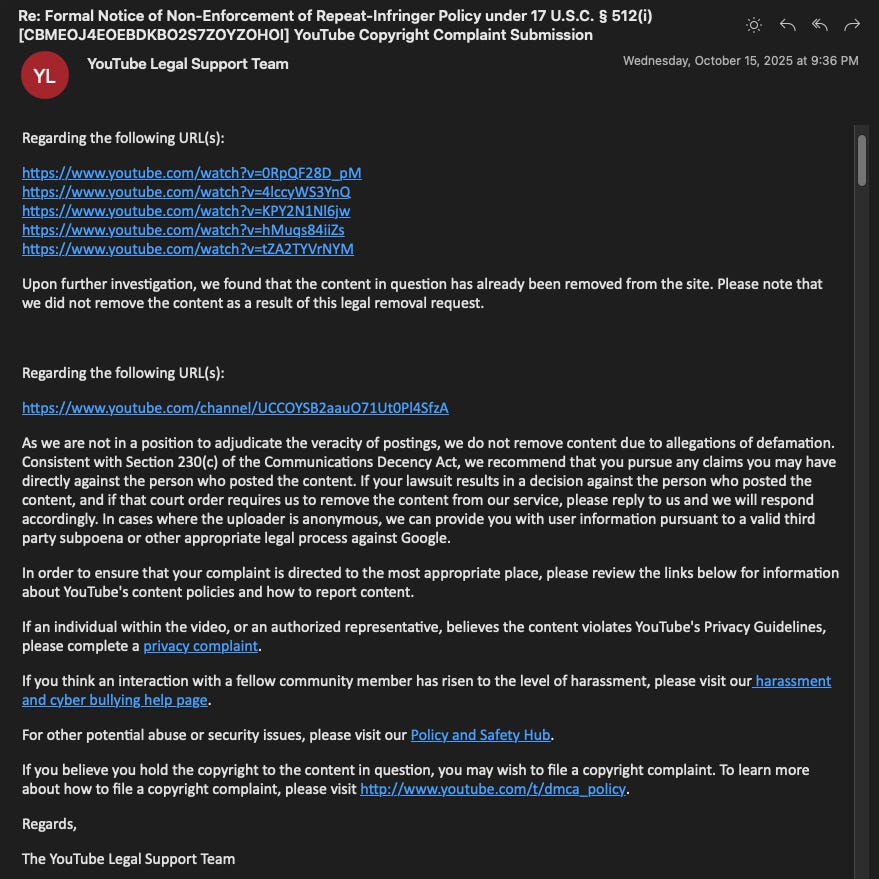

III. The Copyright Paradox: Enforcement for the Wrong People

YouTube presents itself as a defender of copyright owners — a platform that swiftly removes stolen material.

In reality?

YouTube vigorously enforces copyright when the claimant is a corporation. It ignores copyright when the claimant is an individual.

Creators who use someone’s image, likeness, or original footage for:

harassment

smear campaigns

monetized attacks

or defamatory thumbnails

can remain untouched for months or years.

But upload a movie clip from Disney? It’s gone before you blink.

Use your own copyrighted photograph to report a channel misusing it?

YouTube may:

demand more proof,

reject the claim without explanation,

or require a court order — even when the law does not.

One rule for corporations. One rule for creators. And one rule for the abusers YouTube quietly protects.

IV. How Abusive Channels Survive

Repeat offenders survive because YouTube’s systems are designed for:

✔ scalability

✔ automation

✔ deniability

—not accountability.

The loopholes are endless:

1. Thumbnail Abuse

Creators use copyrighted images, faces, and private photos with impunity.

2. “Transformative” Misuse

Harassers hide behind a deliberately misunderstood interpretation of “fair use.”

3. Desynchronization

YouTube treats harassment in video, comments, thumbnails, and community posts as separate issues.

Abusers exploit this.

4. Reporting Blind Spots

YouTube’s reporting tools don’t match policy language.

The platform technically has rules prohibiting:

targeted harassment,

personal attacks,

malicious falsehoods,

doxxing,

and incitement.

But the reporting menu offers no matching options for them.

5. Revenue Armor

If a channel generates decent ad revenue, enforcement becomes “complex.”

Meaning: YouTube looks the other way.

V. The Victim’s Reality: No Recourse, No Review, No Human Accountability

As previously reported in "The Extraordinary Lengths YouTube, Google and GoFundMe Go to Ignore and Profit from Libel, Privacy Violations, Cyber-Bullying, Copyright Infringement, and Online Incitement to Harass", when creators are targeted by harassment channels:

YouTube won’t disclose the content of reports.

YouTube won’t provide timestamps.

YouTube won’t meaningfully investigate.

YouTube won’t de-escalate brigading.

YouTube won’t protect small channels from large ones.

YouTube won’t enforce its own three-strike system.

In most cases, creators are told:

“Please resolve this privately.”

As if:

harassment mobs,

defamation,

impersonation,

malicious thumbnails,

and coordinated attacks

can be “worked out” between a victim and the person exploiting them for profit.

It’s absurd on its face. And YouTube knows it.

But creators aren’t YouTube’s customers. Advertisers are.

VI. Why YouTube Protects Abusers

There is no mystery. The incentives are obvious:

➡ Abusive channels generate engagement.

Their audiences click, watch, comment, argue, fight, and share.

➡ Engagement generates ad revenue.

Even the negative attention is profitable.

➡ Enforcement costs money.

Humans must review cases. Lawyers get involved. Liability increases.

➡ Protecting abusers protects revenue streams.

Creators reporting harassment don’t generate profit. Drama channels do.

➡ The three-strike system exists for show, not enforcement.

A PR shield. A corporate firewall.

VII. How YouTube’s Failure Enables Real-World Harm

This is the part YouTube refuses to acknowledge:

Harassment channels don’t just harm creators online. They trigger real-life consequences.

Victims experience:

job loss

doxxing

swatting attempts

reputational harm

mental health crises

audience intimidation

permanent damage to their digital footprint

The platform knows this. Creators warn it repeatedly.

And YouTube’s silence continues.

Because acknowledgment would create liability. And liability is expensive.

VIII. What YouTube Should Fear Most

Not lawsuits. Not PR scandals. Not creator backlash.

But regulation.

The EU’s Digital Services Act and proposed U.S. legislation are aimed squarely at platforms that:

ignore harassment,

fail to moderate,

allow repeat abuse,

and provide no transparency to victims.

YouTube may finally face what it has avoided for 15 years: enforce your rules, or lose your immunity.

IX. Closing: When Platforms Reward Abuse, Abuse Becomes the Product

YouTube has spent years pretending its harassment problem is isolated.

It isn’t. It’s systemic. It’s structural. It’s incentivized.

And it has given rise to an entire ecosystem of abusers who:

build careers on cruelty,

monetize misconduct,

weaponize their followers,

and rely on YouTube’s deliberate inaction to keep operating.

YouTube’s three-strike system works beautifully — just not for the people it claims to protect.

X. What Must Change: A Blueprint for Fixing YouTube’s Harassment Crisis

YouTube does not have a “creator behavior” problem. It has a platform architecture problem — and only systemic reform can fix it.

Below are eight concrete, achievable reforms that would immediately reduce abuse, increase accountability, and bring YouTube in line with its own stated policies and emerging regulatory frameworks.

These are not abstract ideals. They are practical changes YouTube could implement tomorrow — if it wanted to.

1. A Real Three-Strikes System — Applied to Everyone

The first and most obvious step:

Consistent enforcement.

A violation is a violation — whether committed by:

a millionaire drama channel,

a mid-tier political grifter,

or a small creator with 200 subscribers.

If the system cannot enforce rules against its most profitable offenders, then the system does not exist.

Fix:

A unified strike log viewable by creators

Automatic enforcement on strike accumulation

Zero manual exemptions for “high-engagement” channels
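The three fixes above can be sketched in a few lines of Python. This is a minimal illustration, not a description of YouTube's actual systems: the names `Strike` and `StrikeLog`, the 90-day window, and the three-strike threshold are all assumptions made for the example. The point is that the enforcement decision takes the strike count as its only input, so there is nowhere for a "high-engagement" exemption to enter.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical values for illustration; not YouTube's actual thresholds.
STRIKE_WINDOW = timedelta(days=90)
TERMINATION_THRESHOLD = 3


@dataclass
class Strike:
    issued_at: datetime
    surface: str  # e.g. "video", "thumbnail", "community_post"
    policy: str   # e.g. "harassment", "copyright"


@dataclass
class StrikeLog:
    """One log per channel, covering every surface, readable by the creator."""
    channel_id: str
    strikes: list[Strike] = field(default_factory=list)

    def add(self, strike: Strike) -> None:
        self.strikes.append(strike)

    def active(self, now: datetime) -> list[Strike]:
        """Strikes still inside the rolling window."""
        return [s for s in self.strikes if now - s.issued_at <= STRIKE_WINDOW]

    def enforcement(self, now: datetime) -> str:
        """Map the active strike count to an action.

        Channel size and revenue are deliberately not parameters.
        """
        count = len(self.active(now))
        if count >= TERMINATION_THRESHOLD:
            return "terminate"
        if count > 0:
            return "restrict"
        return "none"
```

Because the log is a plain per-channel record, the same structure could be shown to the creator as is, which is all a "viewable strike log" requires.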

2. A Human Review Channel for Harassment and Defamation

Algorithmic enforcement fails because harassment is contextual.

YouTube needs:

✔ A dedicated human moderation team for harassment claims

✔ A 72-hour review SLA (service-level agreement)

✔ A transparent case outcome explainer

This is standard on every other modern tech platform.

YouTube is the outlier.

3. Unified Policy Enforcement: No More Siloed Abuse Across Thumbnails, Videos & Community Posts

Right now, a creator can:

attack someone in a thumbnail,

defame them in a poll,

harass them in a livestream,

spread lies in the description,

and rally mobs in the comments

…and YouTube treats each one as a separate, unconnected incident.

This loophole keeps abusers alive.

Fix: One creator → One account → One unified behavior record.

Everything counts.

4. Copyright Protection for Individuals, Not Just Corporations

YouTube’s current copyright enforcement disproportionately protects:

Disney

Warner Bros

Universal

Major networks

But routinely ignores individuals whose:

personal photos,

film stills,

copyrighted headshots,

or professional images

are misused in defamation or harassment.

Fix:

A dedicated copyright form for individual creators

“Misuse of personal likeness” as a standalone category

Automatic removal of defamatory thumbnails using private images

Faster processing of individual copyright claims

5. Creator Safety Scores (Modeled After Trust & Safety Systems in Gaming Platforms)

YouTube already tracks everything creators do. It simply doesn’t use that data for safety.

Solution:

A Creator Conduct Score, based on:

harassment reports

doxxing attempts

copyright misuse

strike frequency

volume of removed comments

pattern of targeting specific individuals

Creators who repeatedly harm others should:

lose monetization,

lose privileges,

lose livestream access,

and eventually lose their accounts.

Safe platforms use conduct scoring. YouTube pretends it doesn’t exist.
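A conduct score of this kind could be as simple as a weighted sum with escalating consequences. The sketch below is one possible shape, not an existing YouTube feature: every weight, threshold, and signal name in it is a hypothetical value chosen for illustration, and a real system would need to count only reports that survive review so that mass reporting cannot inflate the score.

```python
# Hypothetical weights per signal; the signals mirror the list above.
WEIGHTS = {
    "harassment_reports": 3.0,
    "doxxing_attempts": 10.0,
    "copyright_misuse": 2.0,
    "strikes": 5.0,
    "removed_comments": 0.1,
    "repeat_target_incidents": 4.0,
}

# (minimum score, consequence), checked from most to least severe.
# The thresholds are illustrative assumptions.
TIERS = [
    (50.0, "terminate account"),
    (30.0, "remove livestream access"),
    (20.0, "remove privileges"),
    (10.0, "remove monetization"),
]


def conduct_score(signals: dict[str, int]) -> float:
    """Weighted sum of the counted signals for one creator."""
    unknown = set(signals) - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unknown signals: {sorted(unknown)}")
    return sum(WEIGHTS[name] * count for name, count in signals.items())


def consequence(score: float) -> str:
    """Return the most severe consequence whose threshold the score meets."""
    for minimum, action in TIERS:
        if score >= minimum:
            return action
    return "no action"
```

For example, `consequence(conduct_score({"strikes": 2, "doxxing_attempts": 1}))` evaluates a creator with two strikes and one doxxing attempt against the same tiers as everyone else.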

6. A “Notoriety Shield” Against Brigading and Malicious Reporting

Abusers weaponize YouTube’s reporting tools.

Targets of harassment face:

mass-report attacks

false flagging

coordinated takedowns

manipulation campaigns

Fix:

Detect brigading patterns

Discount repeat reporters from linked IP/behavior clusters

Auto-escalate mass report incidents to human review

Restore visibility after false-flag takedowns
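The second and third fixes above lend themselves to a short sketch. It assumes that some upstream process has already grouped reporters into clusters by linked IP or behavior; that grouping is the hard part and is not shown here. The `cluster_id` field, the `MASS_REPORT_THRESHOLD` value, and the function names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical raw-report count at which an incident goes to a human.
MASS_REPORT_THRESHOLD = 20


@dataclass(frozen=True)
class Report:
    reporter_id: str
    cluster_id: str  # assumed label for a linked IP/behavior cluster


def effective_report_count(reports: list[Report]) -> int:
    """Count each cluster once, however many reports it filed."""
    return len({r.cluster_id for r in reports})


def triage(reports: list[Report]) -> dict:
    """Summarize a batch of reports against one video or channel."""
    raw = len(reports)
    return {
        "raw_reports": raw,
        "effective_reports": effective_report_count(reports),
        # Mass-report incidents are escalated rather than auto-actioned.
        "needs_human_review": raw >= MASS_REPORT_THRESHOLD,
    }
```

Under these assumptions, 25 reports filed from two linked clusters count as two effective reports and are routed to a human reviewer instead of triggering an automatic takedown.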

7. Transparency Reports for Creator vs. Platform Enforcement

Every quarter, YouTube should publish:

The number of harassment reports received

The number of harassment removals issued

The number of repeat-offender channels identified

The number of channels with more than two policy strikes

The number of cases where monetization or revenue influenced enforcement decisions

Average cycle time for reviewing serious complaints

Actions taken to protect small creators from larger, abusive creators

Sunlight is accountability. Right now, YouTube operates almost entirely in the dark.

8. A Creator Bill of Rights

This should include:

The right to a human review

The right to a clear explanation for enforcement actions

The right to appeal

The right to protection from coordinated harassment

The right to privacy and likeness protection

The right to accurate moderation

The right to equal enforcement regardless of channel size

Every other industry with massive power imbalances has codified protections.

Creators deserve the same.

The Bottom Line: YouTube Knows How to Fix This — It Just Hasn’t Been Forced To

None of these reforms are impossible. Most already exist on other platforms. Some already exist inside YouTube but are hidden from creators.

The problem is not technical. It’s not logistical. It’s not a question of “whether YouTube can.”

It is a question of whether YouTube will — and whether regulators, creators, journalists, and the public will force the issue.

Because as long as YouTube profits from abusive creators, the platform will continue enabling them.

Three strikes for thee. None for the people YouTube protects.

This is Part I of the Platform Accountability Files.

Next: Part II — GoFundMe

“Crowdfunding or Laundering Money? The Dark Architecture of GoFundMe’s Moderation Failure.”