Wikipedia's War on Creators - How the “Free Encyclopedia” Became the Web’s Most Hostile Platform for the People Who Make Culture

The Wiki Paradox, Part III

Wikipedia wants your money.

Every year, without fail, the Wikimedia Foundation plasters the top of Wikipedia’s pages with guilt-inducing banners implying the site is seconds away from collapse if you don’t chip in five dollars.

Meanwhile, behind the curtain?

The world’s “free encyclopedia” is waging an invisible war against the very people who build culture — artists, authors, musicians, filmmakers, journalists, historians, and creators of every kind.

Call it what it is:

Wikipedia has become the most hostile environment on the internet for the people who produce the knowledge and cultural output the site pretends to celebrate.

And the irony is blinding:

Creators are punished for contributing

Volunteers with no accountability control everything

Donors fund an organization that outsources nearly all labor to unpaid gatekeepers

And contributors are routinely attacked by the same editors the Foundation refuses to regulate

This isn’t a “bad apple” problem. It’s a systemic, cultural failure — one that has gone unchecked for 20 years.

The Great Wikipedia Paradox - Creators make the content, but volunteers control the narrative.

On paper, Wikipedia’s mission is noble:

“Imagine a world in which every single human being can freely share in the sum of all knowledge.”

But in practice?

The platform operates like a closed guild, policed by entrenched volunteers who often:

distrust creators

resent subject-matter experts

assume bad faith

and wield policy like a blunt weapon

Creators who attempt to correct misinformation or add missing history are met with:

conflict-of-interest (“COI”) accusations

“PAID” accusations

“AI-generated” accusations

bureaucratic obstruction

hostility

mockery

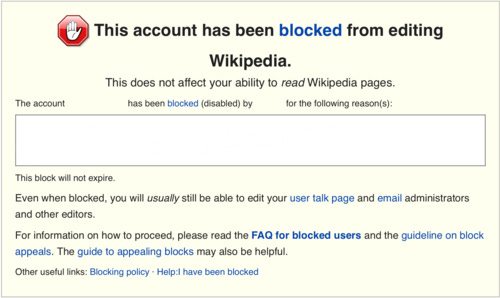

and sometimes, permanent bans

These attacks are not isolated. They are routine. Predictable. Documented across hundreds of discussions, forums, and editor complaints.

Creators are treated not as contributors — but as potential threats.

And it gets worse.

Why Wikipedia Distrusts Creators - The platform is built on a fundamental contradiction.

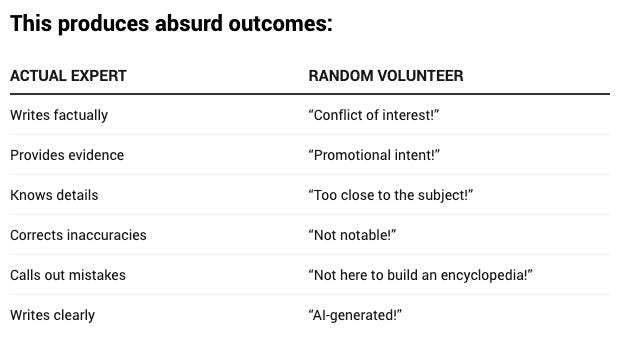

Wikipedia’s ideology assumes:

Anyone with firsthand knowledge is automatically biased.

But anyone with no knowledge is inherently neutral.

This is how Wikipedia ends up in the bizarre position of:

rejecting factual content

erasing legitimate history

punishing subject-matter experts

deleting pages about pre-internet works

dismissing physical archives

promoting digital bias

rewarding ignorance

empowering hostility

and ostracizing the creators whose work built the culture the site depends on

Creators aren’t just mistrusted. They are penalized for knowing too much.

Volunteers are empowered for knowing nothing.

This is how Wikipedia loses history — and why creators learn quickly:

You are welcome to read Wikipedia.

But you are not welcome to contribute.

The Violence of Volunteerism - Where is the money actually going?

Now for the part Wikipedia really doesn’t want discussed.

In recent years, the Wikimedia Foundation has raised $150–$180 million a year in donations from around the world.

What do donors think they’re funding?

Fact-checkers

Editors

Moderators

People who improve articles

People who prevent abuse

What does the Foundation actually fund?

✔ Lawyers

✔ Lobbyists

✔ PR

✔ Branding

✔ Staff not involved in content

✔ Grants for unrelated projects

✔ Executive salaries

✔ Administrative overhead

What does it fund the least?

❌ Actual moderation

❌ Editor training

❌ Editorial staff

❌ Trust & safety intervention

❌ Cleanup of abusive behavior

❌ Accountability systems

❌ Support for creators

❌ Historical preservation

Who does the real editorial labor?

Unpaid volunteers, many of whom:

have never worked professionally in research, journalism, or culture

have no editorial oversight

enforce policies inconsistently

bully newcomers

engage in dogpiles

and operate without transparency or accountability

Worse:

Wikipedia’s paid Foundation staff leave the regulation of the volunteer editors who run the site almost entirely to those same volunteers.

So:

Volunteers attack contributors

Contributors leave

Articles remain inaccurate

Donors assume their money hired people to prevent this

And Wikipedia continues begging for cash

The dysfunction is breathtaking.

The AI Paranoia Crisis - A new weapon for old gatekeepers

In the past year, a disturbing new trend has emerged:

Veteran editors now dismiss articulate writing as “AI-generated.”

It is the perfect smear because:

it requires no evidence

it delegitimizes instantly

it shuts down debate

it cannot be disproven

it polices style instead of substance

it gives veteran editors a pretext to block anyone

What used to be “You’re too close to the subject” has evolved into:

“You write too well — therefore you’re not human.”

It is:

the new witch hunt

the new “paid editor” smear

the new way to silence outsiders

the new power move for gatekeepers

AI paranoia has become the perfect excuse to block anyone they dislike.

The Real Victims - Creators of Pre-Digital History

The people most harmed by Wikipedia’s hostility are not spammers or marketers.

They are:

Independent creators

Analog-era artists

Journalists from the print era

Regional broadcasters

Local historians

Community documentarians

Anyone whose work predates Google

Wikipedia’s notability system, created after the digital revolution, cannot meaningfully evaluate culture that existed before it. Work that predates Google is treated as if it were fiction.

And instead of updating the system, Wikipedia punishes those who try to bridge the gap.

It has erased:

local arts movements

independent film scenes

regional culture

early-career milestones

community histories

pre-internet journalism

analog broadcasts

grassroots creative ecosystems

Not because they lack cultural value — but because they lack digitized evidence. This produces a massive, ongoing erasure of entire cultural ecosystems.

Wikipedia routinely dismisses:

physical archives

newspaper listings

institutional catalogs

microfilm

broadcast ephemera

exhibition histories

early academic work

regional achievements

foundational innovations

analog media history

Not for lack of relevance.

But because the evidence isn’t readable by Google.

This is how Wikipedia becomes the web’s most powerful force for unintentional cultural erasure.

The Reddit Meltdown That Proved The Point - When gatekeepers leave their home turf, the behavior goes with them

Shortly after we published Part II, a link to it shared on r/Wikipedia triggered a meltdown so predictable and so on-brand that it deserves documentation.

One user — immediately and aggressively — responded with:

accusations of being an AI

accusations of being a paid editor

tone-policing

identity-policing

conspiracy theories

hostility toward articulate writing

wild assumptions about intent

demands for personal disclosure

attempts to “unmask” the author

claims that criticism of Wikipedia is evidence of wrongdoing

It took only two comments for a moderator to lock the thread:

“Since this post is going to draw more heat than light, I’m locking this thread.”

The incident was not a distraction — it was data.

It demonstrated:

the same hostility

the same pack behavior

the same refusal to engage ideas

the same identity fixation

the same weaponization of suspicion

the same anti-creator bias

Wikipedia’s culture doesn’t stay on Wikipedia. It spreads into every space its defenders occupy.

Wikipedia Will Not Fix Itself - Because the Foundation benefits from the dysfunction.

Wikipedia thrives on a myth:

“We’re a nonprofit.

We’re broke.

We need your help.”

Meanwhile, the Foundation is sitting on hundreds of millions in assets, and the people making the site unbearable? They’re unpaid, unregulated, and unmonitored.

It’s the digital equivalent of volunteers patrolling a library, using hostility to keep people out, while the librarians keep asking for donations to fix a problem the volunteers created.

The Foundation gets the money. The volunteers get the power. The creators get the abuse.

It’s a perfect system — for everyone except the public.

What Comes Next - The Platform Accountability Files

This article is Part III in our ongoing investigative series into platforms that:

weaponize their users

enable abuse

distort truth

and hide behind “community standards”

Upcoming installments include:

YouTube

“Three Strikes for Thee, Never for Me: How YouTube Enables Abuse by Protecting Repeat Offenders”

GoFundMe

“Crowdfunding or Laundering? The Dark Architecture Behind GoFundMe’s Moderation Failure”

Meta / Facebook

“Shadowbans, Fraud Ads, and Community Collapse: Why Meta Cannot Police Itself”

Reddit

“The Tyranny of Volunteer Mods: How Reddit Outsourced Governance to Unaccountable Mini-Dictators”

The Broader Crisis

“How Tech Platforms Became Unaccountable Institutions That No Longer Serve the Public”

Each installment will:

expose platform failures

examine real cases

document systemic abuse

reveal financial incentives

call out hypocrisy

propose reforms

Next in The Platform Accountability Files: YouTube, “Three Strikes for Thee, Never for Me: How YouTube Enables Abuse by Protecting Repeat Offenders.”

Stay tuned.